In short, RAG (Retrieval-Augmented Generation) is a technique for enhancing the accuracy and reliability of generative AI models with facts fetched from external sources.

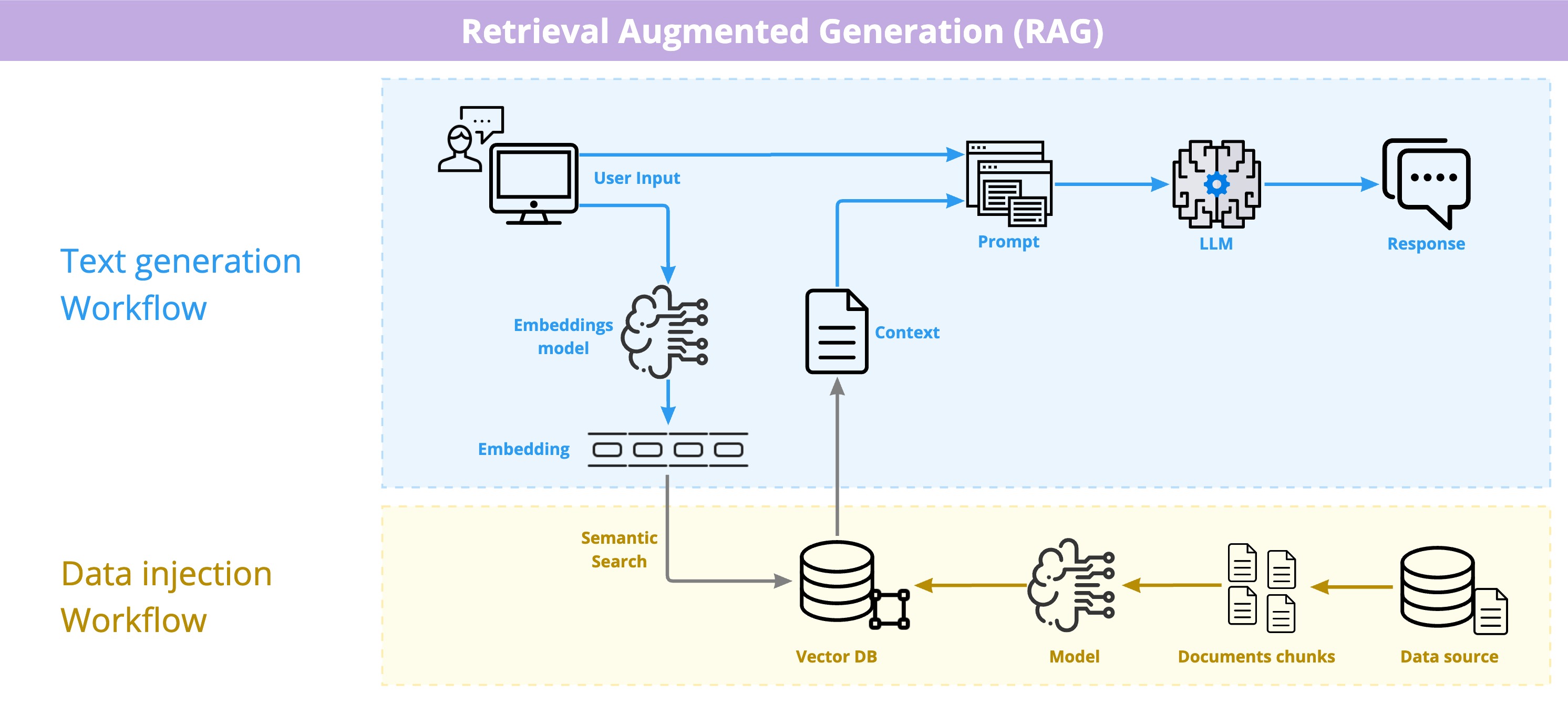

I created an image to represent the flows of the RAG technique:

Imagine that a company has private data that the LLM does not know about, and it needs to surface this data in answers to users’ questions. There are other techniques to do this, but in this article, I will focus only on RAG.

As you can see in the image above, RAG has two workflows: one that stores the data and one that answers the user’s question. Returning to the company example, to use RAG, the company provides its data as a data source. We split this data into chunks, embed each chunk, and store the resulting vectors in a vector DB.
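To make the storing workflow more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `chunk_text` uses a simple fixed word count, `toy_embed` is a hashed bag-of-words stand-in for a real embedding model, and `vector_db` is just an in-memory list instead of a real vector DB.

```python
import hashlib
import math
from typing import List


def chunk_text(text: str, max_words: int = 8) -> List[str]:
    """Split text into chunks of at most max_words words (illustrative strategy)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def toy_embed(text: str, dim: int = 64) -> List[float]:
    """Hypothetical stand-in for an embedding model.

    Hashes each word into one of `dim` buckets and L2-normalises the counts.
    A real embedding model captures meaning; this one only captures word overlap.
    """
    vec = [0.0] * dim
    for word in text.lower().split():
        digest = hashlib.md5(word.encode("utf-8")).digest()
        vec[int.from_bytes(digest[:4], "big") % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


# Made-up sample of "private company data"
document = (
    "Refunds are processed within 14 days. "
    "Refund requests need an order number. "
    "The office is closed on public holidays."
)

# In-memory stand-in for a vector DB: one record per chunk
vector_db = [
    {"text": chunk, "vector": toy_embed(chunk)}
    for chunk in chunk_text(document)
]
```

In a real setup, the chunking strategy and the embedding model are design choices that depend on the data, so treat the values above as placeholders.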

Vectors are arrays of numbers, generated by a machine learning model, that represent complex objects such as words, phrases, images, or videos.
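As a small illustration of the idea, the sketch below treats three vectors as points and measures how far apart they are. The numbers are hand-made for this example and do not come from any model; real embeddings usually have hundreds or thousands of dimensions.

```python
import math

# Hand-made 3-dimensional vectors, for illustration only
invoice = [0.9, 0.1, 0.0]
bill = [0.8, 0.2, 0.1]
holiday = [0.0, 0.2, 0.9]

# Euclidean distance between points: smaller means "more alike"
distance_invoice_bill = math.dist(invoice, bill)
distance_invoice_holiday = math.dist(invoice, holiday)

print(distance_invoice_bill < distance_invoice_holiday)  # True
```

The point is only that objects with related meaning should end up close to each other, while unrelated ones end up far apart.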

Once the data is stored in the vector DB, we can query it. When we receive the user’s input, we use the embedding model to embed the input. We can then search the vector DB for the stored vectors closest to the input vector. Because the vectors are points in a high-dimensional space, closeness between them reflects semantic similarity.
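A minimal sketch of this retrieval step could look like the following. It assumes the query has already been embedded, uses cosine similarity as the similarity measure (one common choice, not the only one), and scans a small in-memory list. The `retrieve` function, the `store` contents, and the vectors are all hypothetical; a real vector DB would normally use an index instead of a full scan.

```python
import math
from typing import List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(
    query_vector: List[float],
    store: List[Tuple[str, List[float]]],
    k: int = 2,
    min_score: float = 0.0,
) -> List[Tuple[float, str]]:
    """Return up to k (score, text) pairs with the highest similarity."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in store]
    scored = [item for item in scored if item[0] >= min_score]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]


# Hand-made vectors standing in for stored chunk embeddings
store = [
    ("Refunds are processed within 14 days.", [0.9, 0.1, 0.0]),
    ("The office is closed on public holidays.", [0.0, 0.2, 0.9]),
    ("Refund requests need an order number.", [0.8, 0.3, 0.1]),
]

# Stand-in for the embedded user question, e.g. "How do refunds work?"
query_vector = [0.85, 0.2, 0.05]

results = retrieve(query_vector, store, k=2)
for score, text in results:
    print(f"{score:.3f}  {text}")
```

With these made-up numbers, the two refund chunks score far higher than the holiday chunk, so they are the ones returned.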

If we find something, we add it to the prompt as context alongside the user’s original input. With this context, the LLM can answer the question more precisely, based on the company’s private information, and give the desired answer.
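Here is one way this prompt assembly could be sketched. The `build_prompt` function and the wording of the prompt template are my own assumptions for illustration; the exact instructions you give the LLM will vary by model and use case.

```python
from typing import List


def build_prompt(question: str, context_chunks: List[str]) -> str:
    """Combine retrieved chunks and the user's question into a single prompt.

    If nothing was retrieved, the question is passed through unchanged.
    """
    if not context_chunks:
        return question
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical chunks returned by the retrieval step
retrieved = [
    "Refunds are processed within 14 days.",
    "Refund requests need an order number.",
]

prompt = build_prompt("How long does a refund take?", retrieved)
print(prompt)
```

The resulting string is what gets sent to the LLM in place of the raw question.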

To conclude, this technique is widely used, especially because some models limit the number of tokens allowed in a prompt. With the rise of new models that allow a much larger number of tokens, it is becoming a bit easier to provide a lot of private information directly in the prompt without applying a semantic search through RAG.

I will bring some hands-on examples in a future article or on my YouTube channel.